-

Okay, here's a 🧵 on how I've used single-question asynchronous quizzes since Fall 2020. @drspoulsen/1452748576052981761

-

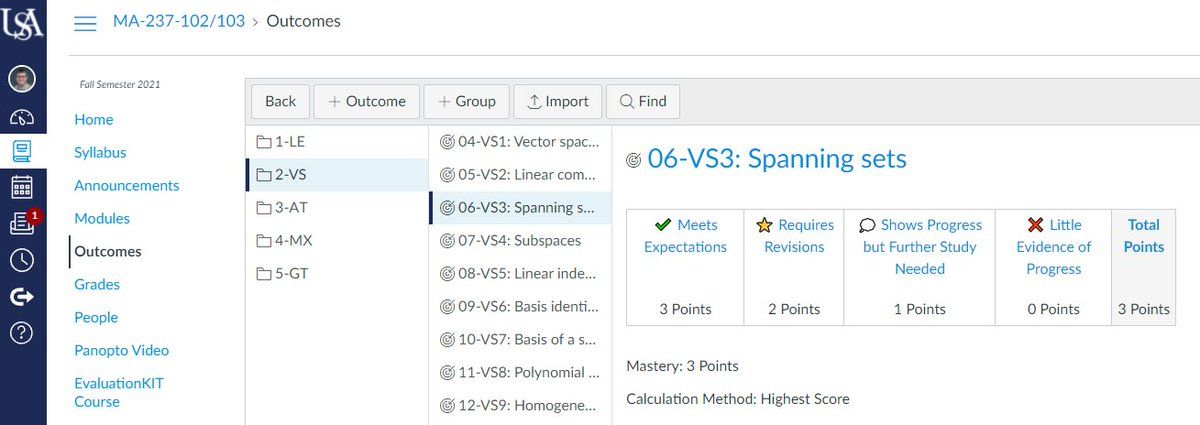

First, the course is divided into well-defined learning outcomes (shout-out to @Grading4Growth). In @CanvasLms you can represent these as "Outcomes".

-

If you're using a textbook, an outcome is approximately a section (in the LA book I maintain with @siwelwerd et al., it's exactly a section), but use your judgement: each outcome will have one type of exercise used to measure it.

-

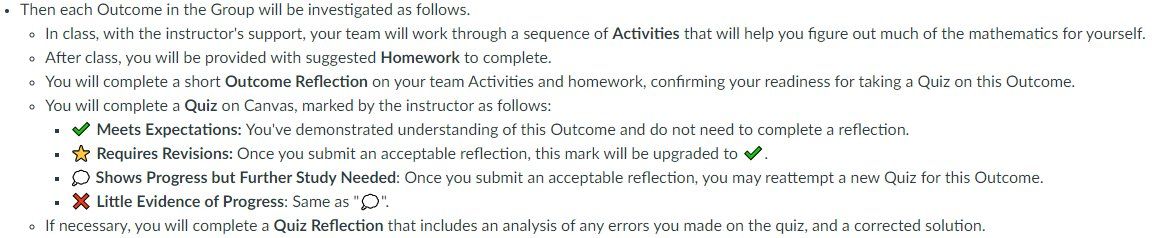

The quiz/reflection/quiz loop continues until a student successfully "✔️ Meets Expectations".
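To make the loop concrete, here's a minimal Python sketch (not part of CheckIt or Canvas). It assumes each attempt gets one of two marks, "✔️ Meets Expectations" or a hypothetical "Not yet", and that every "Not yet" is followed by a reflection and a fresh quiz, so the loop ends at the first ✔️.

```python
from enum import Enum


class Mark(Enum):
    # "Not yet" is a hypothetical label for any attempt that doesn't meet expectations.
    MEETS = "✔️ Meets Expectations"
    NOT_YET = "Not yet"


def attempts_until_meets(marks):
    """Return the attempt number of the first MEETS mark (1-based).

    Each NOT_YET is assumed to send the student through a reflection and
    then a new quiz, so anything after the first MEETS is never reached.
    Returns None if the student hasn't met expectations yet.
    """
    for attempt, mark in enumerate(marks, start=1):
        if mark is Mark.MEETS:
            return attempt
    return None


print(attempts_until_meets([Mark.NOT_YET, Mark.NOT_YET, Mark.MEETS]))  # 3
```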

-

At the end of the semester, course grades are largely based on how many ✔️s the student has earned. This semester, I've decided to also consider the result of a proctored in-person final exam, along with a Final Reflection where each student makes a case for their letter grade.
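As a rough illustration of the "count the ✔️s" step, the Python sketch below tallies met outcomes per student. The gradebook shape (student → {outcome: met?}) and the names in it are hypothetical, not a Canvas export format.

```python
def count_checks(gradebook):
    """Count the outcomes each student has marked as met.

    gradebook: hypothetical dict of student -> {outcome_id: bool}, where
    True means the student earned "✔️ Meets Expectations" on that outcome.
    """
    return {
        student: sum(1 for met in outcomes.values() if met)
        for student, outcomes in gradebook.items()
    }


gradebook = {
    "student_a": {"V1": True, "V2": True, "V3": False},
    "student_b": {"V1": True, "V2": False, "V3": False},
}
print(count_checks(gradebook))  # {'student_a': 2, 'student_b': 1}
```

How those counts map to letter grades, and how the final exam and Final Reflection are weighed alongside them, is the instructor's call and isn't specified in this thread.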

-

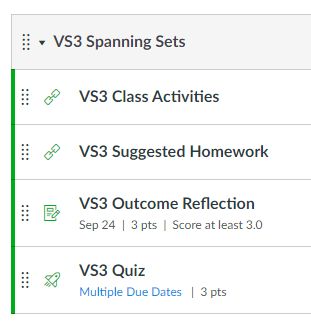

The outcome reflections become available once we've wrapped up discussion of the outcome in class. The quiz is then due within 1-2 weeks (really they have 2-3 weeks before access in Canvas is locked, but they can request extensions through the end of the semester).
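Here's a small Python sketch of that timeline, assuming both windows are counted from the day the reflection opens. The thread gives ranges (1-2 weeks, 2-3 weeks); picking two weeks for the due date and three for the lock is an assumption for illustration only.

```python
from datetime import date, timedelta

# Assumed windows, measured from when the outcome's reflection opens.
DUE_AFTER = timedelta(weeks=2)
LOCK_AFTER = timedelta(weeks=3)


def quiz_schedule(reflection_opens):
    """Return the open, due, and lock dates for one outcome's quiz."""
    return {
        "opens": reflection_opens,
        "due": reflection_opens + DUE_AFTER,
        "locks": reflection_opens + LOCK_AFTER,
    }


print(quiz_schedule(date(2021, 9, 1)))
```

Extensions past the lock date would be handled per request, up to the end of the semester, so they're left out of the sketch.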

-

Two or three days a week, I grade all the quiz submissions I have. This is pretty quick work: I don't obsess over how many points a question gets, and it's usually easy to see whether a student "gets it" or not. If they get it, they fix any minor errors; otherwise, they try again.

-

So grading isn't bad. But where do all these quizzes come from? Well, I wrote an app for that. checkit.clontz.org/

-

By authoring CheckIt question templates, along with the logic that generates the random elements for each learning outcome, I can produce (1) a website of suggested homework with answers, (2) printable PDF quizzes, and (3) random questions exported to an LMS (Canvas/D2L/Moodle).
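The general idea of "template + generator logic" can be sketched in plain Python. This does not reproduce CheckIt's own authoring format: `string.Template` stands in for a question template, and the "solve a 2x2 system" exercise and its value ranges are hypothetical. The generator picks the answer first and builds the problem around it, so every version has a clean integer solution.

```python
import random
from string import Template

# Hypothetical question template; placeholders are filled by generate().
QUESTION = Template("Solve the system: ${a}x + ${b}y = ${e} and ${c}x + ${d}y = ${f}.")


def generate(rng):
    """Pick random coefficients and an integer solution (x, y).

    Coefficients are redrawn until the determinant is nonzero, so the
    system has exactly one solution; the right-hand sides are computed
    from (x, y), which guarantees the answer key is correct.
    """
    while True:
        a, b, c, d = (rng.randint(-5, 5) for _ in range(4))
        if a * d - b * c != 0:
            break
    x, y = rng.randint(-5, 5), rng.randint(-5, 5)
    values = {"a": a, "b": b, "c": c, "d": d, "e": a * x + b * y, "f": c * x + d * y}
    return values, (x, y)


def render(values):
    """Fill the template with one set of generated values."""
    return QUESTION.substitute(values)


values, answer = generate(random.Random(42))
print(render(values), "Answer:", answer)
```

Seeding the random generator makes each version reproducible, which is handy when the same bank has to feed a homework site, PDFs, and an LMS export.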

-

Here's an example: teambasedinquirylearning.github.io/checkit-tbil-la/#/bank/V3/1/ With hundreds of random questions available, each student (probabilistically) gets a different version. But they all measure the same thing, and despite the random values, I really am looking at the explanations students provide.
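The "probabilistically" part is a birthday-problem calculation. Assuming each student independently gets a uniformly random version from a bank of a given size (the bank size and class size below are hypothetical), this Python sketch gives both the chance that two particular students match and the chance that nobody in the class shares a version.

```python
from math import prod


def prob_pair_matches(num_versions):
    """Chance that two particular students draw the same version."""
    return 1 / num_versions


def prob_all_distinct(num_students, num_versions):
    """Chance that no two students share a version (birthday problem),
    assuming independent, uniformly random draws."""
    if num_students > num_versions:
        return 0.0
    return prod((num_versions - i) / num_versions for i in range(num_students))


print(prob_pair_matches(300))
print(prob_all_distinct(30, 300))
```

With 30 students and 300 versions, any given pair matches only 1 time in 300, but the chance of zero repeats across the whole class is only about 22%. So a couple of shared versions in a class is likely, which is fine when the grading focuses on each student's explanation.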

-

So @CheckItProblems provides the "final answer", which I can compare with the student's solution. Whether or not those match up, I can follow the logic of their solution to make sure they can not only produce the right answer but also show an understanding of why it's right.
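If you wanted to automate just the first comparison, here's a hedged Python sketch (a hypothetical helper, not a CheckIt feature). It parses a student's final answer entries exactly with `fractions.Fraction`, so "6/4", "1.5", and "3/2" all count as the same value.

```python
from fractions import Fraction
from typing import Sequence


def answers_match(student, key):
    """Compare a student's final answer with the generated answer key.

    student: entries as typed, e.g. ["3/2", "-4"].
    key: the expected values as Fractions, in the same order.
    Unparseable entries (or a zero denominator) count as a mismatch.
    """
    try:
        values = [Fraction(entry.strip()) for entry in student]
    except (ValueError, ZeroDivisionError):
        return False
    return values == list(key)


print(answers_match(["6/4", "-4"], [Fraction(3, 2), Fraction(-4)]))  # True
```

A match (or mismatch) only tells you where to start reading; the explanation is still what gets graded.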

-

I could ramble more, but I'll end the thread here; let me know if you have questions. @CheckItProblems can be run in a web browser (github.com/StevenClontz/checkit-platform/#dashboard) to either generate custom random exercises or author new ones. I hope to make a new release in December that's even easier to use.